EEG/ERP rounding event latencies

22.10.2019 Edit: Thanks Matt Craddock, I understand the source of the problem better. He mentioned that this should not occur if the amplifiers record the triggers as trigger channels (before converting it to events). And mentions that this could happen through downsampling. Indeed after checking in the dataset I used it was downsampled from 1024 to 512Hz. This made many eventlatencies ~ X.50001, which will be uprounded with round and floored with floor. This gives some context to the problem.

Full discussion on twitter

TLDR; EEGlab allows for non-integer event latencies (in units of samples). Eeglab chose floor(latency), while others e.g. unfold & fieldtrip choose round(latency) to round the latency to samples. This leads to differences between toolboxes, in my example of up to 1.5µV (or ~25% ERP magnitude). Importantly, this probably does not introduce bias between conditions

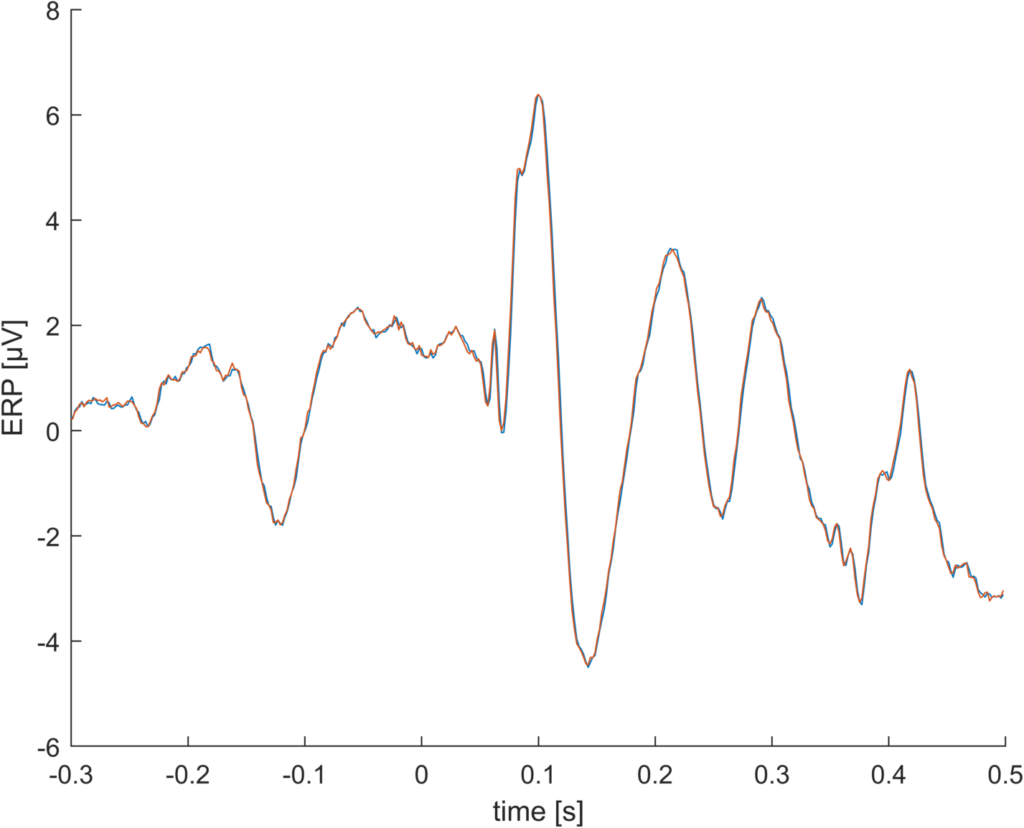

This is an ERP, actually its two ERPs. One is calculated using the “unfold” toolbox and one using eeglab’s pop_epoch function

If you look very closely, you can see that they are not identical, even though they should be. So – whats the difference?

It turns out that EEGlab saves event latencies in samples (e.g. stimulus is starting at sample 213), but also allows non-integer latencies (e.g. stimulus is starting between 212 and 213, to be exact: at sample 212.7). This makes sense, i.e. if your EEG sampling resolution is 100Hz you might know your stimulus onset with higher precision and not in 10ms bins. But in order to get ERPs we have to “cut” the signal at the event onset. EEGlab uses “floor” for this and rounds the stimulus onset from 212.7 to sample 212. Other toolboxes (unfold / fieldtrip) use “round”, thus the event would start at sample 213.

It turns out that in the example you see above, this introduces a difference between the two ERPs of 0.5µV (!) thats around 8% of the magnitude.

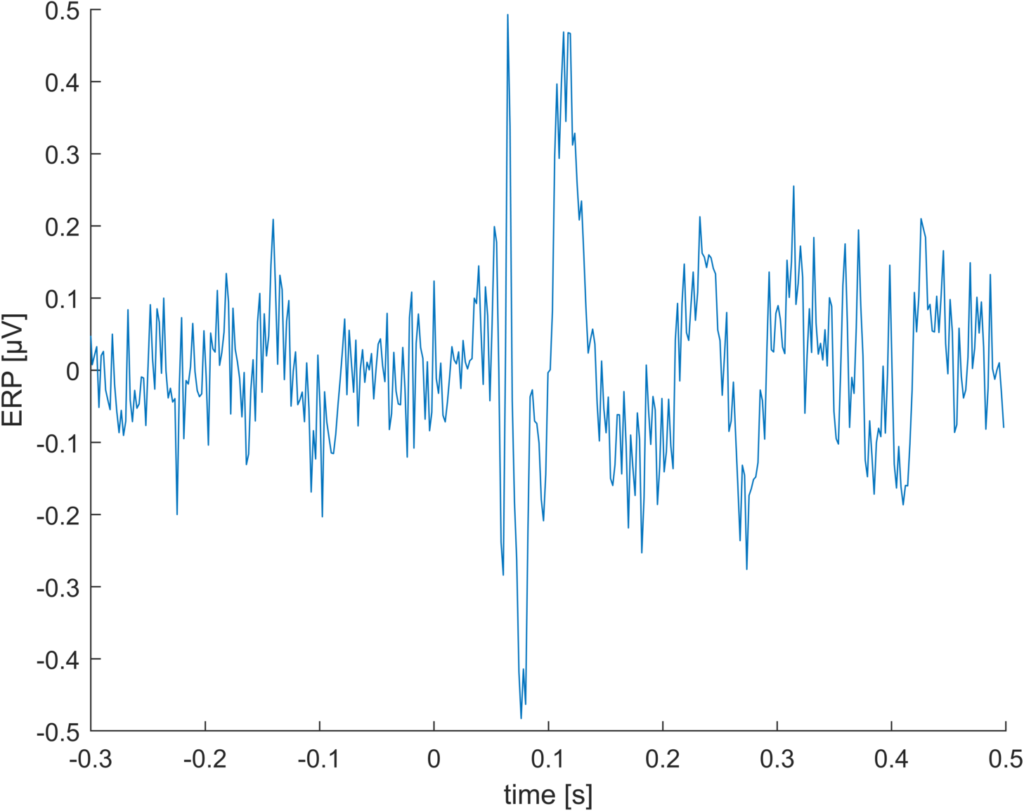

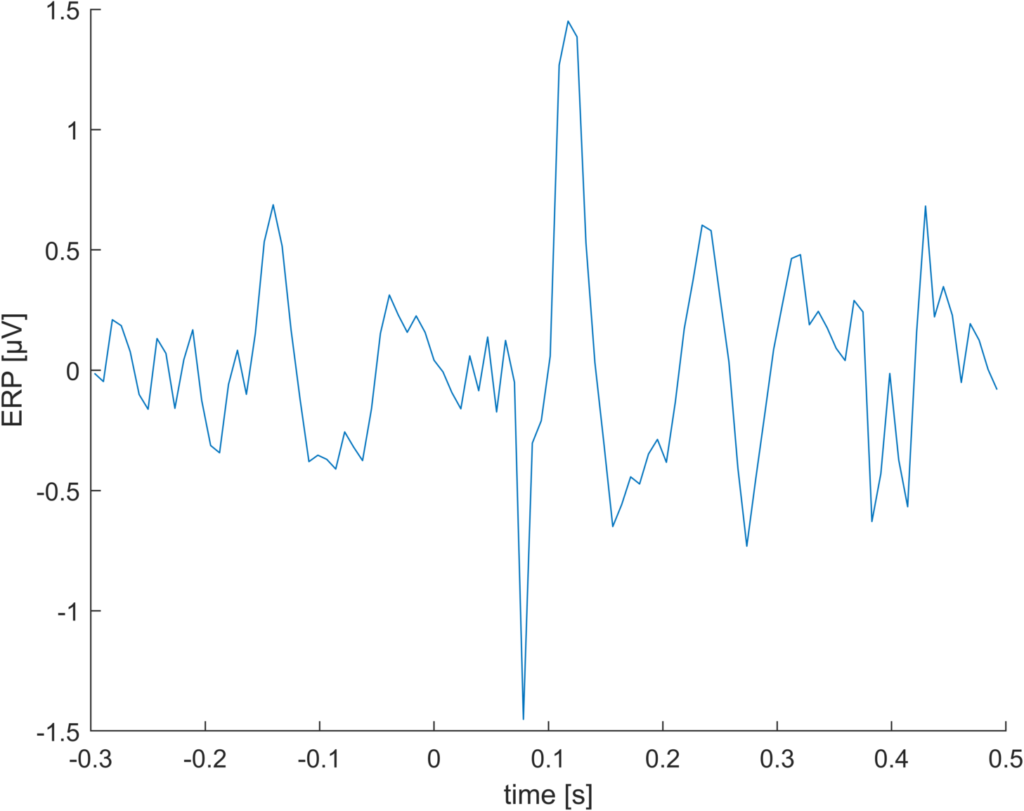

This is just a random example I stumbled upon. With lower sampling rates, this effect should increase. Indeed, downsampling to 128 Hz gives us a whopping difference of 1.5µV.

Floor vs. round (vs. others?)

The benefit of floor, at least the one I can think of, is that it would never shift the onset of a stimulus in the future. That is, it is causal. Possibly there are other benefits I am not aware of.

The benefit of round is that it more accurately reflects the actual stimulus onset. Possibly there are other benefits I am not aware of

Given that we mostly use acausal filtering anyway, I think the causal benefit is not very strong.

There is yet another alternative: a weighted average between samples. We could “split” the event onset to two samples, i.e. if we want the instantaneous stim-onset response, and stim onset is at sample 12.3, then sample 12 should be weighted 30% and sample 13 by 70%. I have to explore this idea a bit more, but I think its very easy to implement in unfold and test. But this for a new blogpost.

The big picture

In the fMRI community there are papers from time to time reporting that different analysis tools (or versions) lead to different results. I am not aware of any such paper in the EEG community (if you know one, let me know please!) but I think it would be nice if somebody would do such comparisons.

I currently do not forsee if such an event-latency-rounding difference could possibly introduce bias in condition differences. But I forsee that changing it will be difficult for the EEGlab developers, as “floor” has been around for a very long time in eeglab.

Code

Note that I did not use simulation here (but could have, it should be straight) but I cannot publicly share the data at this point in time.

load('EEG_subj8_inkl_ufresult.mat')

%%

%EEG = pop_resample(EEG,128);

timelimits = [-.3 .5];

EEG = uf_designmat(EEG,'eventtypes','stimulus','formula','y~1');

%deconv based "round" analysis

EEG = uf_timeexpandDesignmat(EEG,'timelimits',timelimits);

EEG = uf_glmfit(EEG,'channel',63);

%eeglab based "floor" analysis

EEGe = pop_epoch(EEG,{'stimulus'},timelimits);

ufresult = uf_condense(EEGe);

%% ERP plot

figure

plot(ufresult.times,mean(EEGe.data(63,:,:),3)) %floor

hold on

plot(ufresult.times,ufresult.beta(63,:,1)) %round

xlim(timelimits)

xlabel('time [s]')

ylabel('ERP [µV]')

box off

export_fig erp.png -m3 -transparent

%% difference plot

figure

title('Diff "floor"-"round"')

plot(ufresult.times,mean(EEGe.data(63,:,:),3)-ufresult.beta(63,:,1)) %floor-round

xlim(timelimits)

xlabel('time [s]')

ylabel('ERP [µV]')

box off

export_fig diff.png -m3 -transparent

When an EEG sample at time t is acquired, say at a frequency s, the EEG data collected corresponding to the data from time t-1/s to time t. It does not correspond to data from time t-1/(2s) to t+1/(2s). This is the reason why EEGLAB uses the floor function which corresponds to using the round of event latency minus 1/(2s) – after conversion to samples.

Not sure I understand.

Shouldn’t eeglab use ceil then? I.e. if something happens at sample 0.6, the accompanying EEG-sample is sample “1”, something happening at 1.2, the EEG-sample should be “2”.

It is still a inconsistency with the other toolboxes as far as I can see. Would you agree with this?

PS: Sorry for the slow moderation of comments, I get so much spam (even though recaptcha) – I will fix that soon.