Visual Category Learning

We observe learning of novel classes of perceptual stimuli in an M/EEG study over more than 20 sessions.

Authors

Introduction

Every day we use categories to interpret and act on our environment. This is necessary because we have to discriminate, for example, edible from poisonous food or friend from foe. Such representations are involuntarily and immediately accessible to our consciousness. How do new categories emerge in our brain? One way to study human categorization behaviour is to train participants on new classes by presenting different stimuli with feedback and analysing their behaviour. In addition to observing patterns of behaviour, electroencephalography (EEG) can be used to study the electrical changes that category-based tasks evoke in the brain.

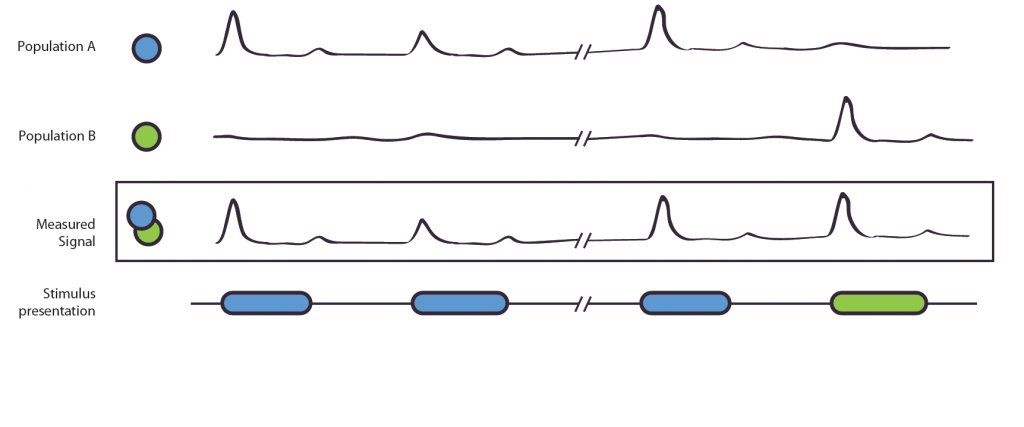

Because of low-level confounds, a process that sorts stimuli into two categories is hard to distinguish from other neuronal processes. We therefore need another paradigm to examine this process, and we use an adaptation approach (cf. Grill-Spector, Henson and Martin, 2006). The adaptation effect is used in various contexts, for example in fMRI, to distinguish different neuronal populations (Krekelberg, Boynton and van Wezel, 2006) or, as in our experiment, as a measure of category membership.

Figure 1. The Adaptation Effect. The measured signal, e.g. local field potential or EEG signal, is the combined signal of entangled neuronal populations/networks A and B. Because of poor spatial resolution and the entanglement, the populations cannot be measured separately. Population A responds selectively to the blue stimulus, population B to the green stimulus. When two blue stimuli are shown in succession, population A shows a reduced activation, and this reduction is also visible in the measured signal. In the second case, population A responds to the blue stimulus and population B to the green stimulus. Because their underlying, unobservable activity is independent, no adaptation effect occurs and the measured signal shows no reduction in strength.
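The logic of Figure 1 can be illustrated with a toy model. This is a minimal sketch, not the analysis used in the study: the function name `summed_response`, the unit response amplitude, and the adaptation factor of 0.5 are all assumptions chosen for illustration.

```python
def summed_response(first, second, adaptation=0.5):
    """Toy summed signal evoked by the second stimulus of a pair.

    Assumptions (illustrative only): each stimulus drives exactly one
    population with unit amplitude, and a population that has just
    responded to the first stimulus responds with (1 - adaptation).
    """
    preferred_stimuli = ["blue", "green"]  # population A prefers blue, B prefers green
    total = 0.0
    for preferred in preferred_stimuli:
        if preferred != second:
            continue  # this population is not driven by the second stimulus
        was_just_active = preferred == first
        total += (1.0 - adaptation) if was_just_active else 1.0
    return total


same_pair = summed_response("blue", "blue")        # population A is adapted
different_pair = summed_response("blue", "green")  # population B is fresh
release = different_pair - same_pair               # release from adaptation
print(same_pair, different_pair, release)
```

Only the summed signal is observable in this sketch, yet the difference between the two pair types reveals that the stimuli are encoded by separate populations.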

We postulate that in an adaptation task, release from adaptation is larger when two successive stimuli differ in class membership than when they belong to the same class. We expect to find these differences in activation, which would indicate a sharpening of neuronal populations to the target stimuli and the extraction of class features, in the fusiform gyrus and LOC, but not in PFC.

Methods

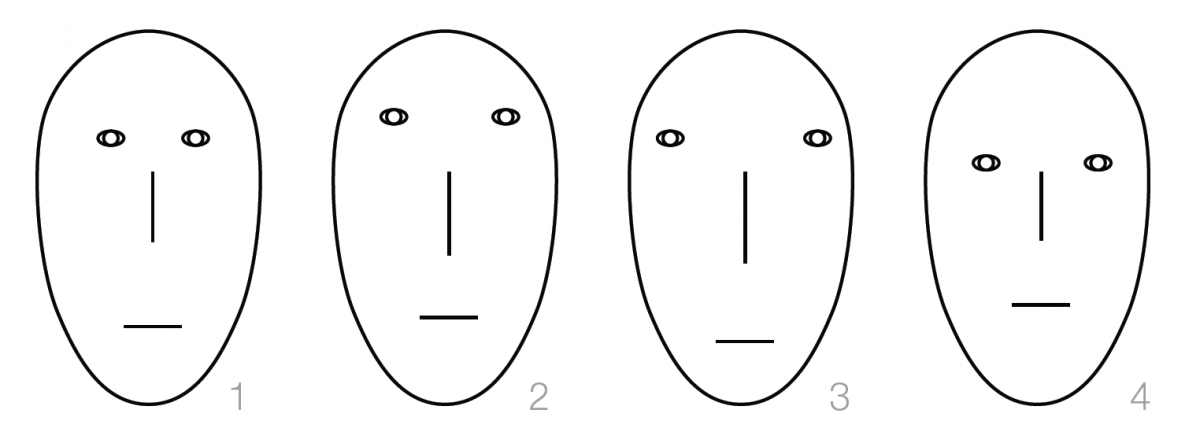

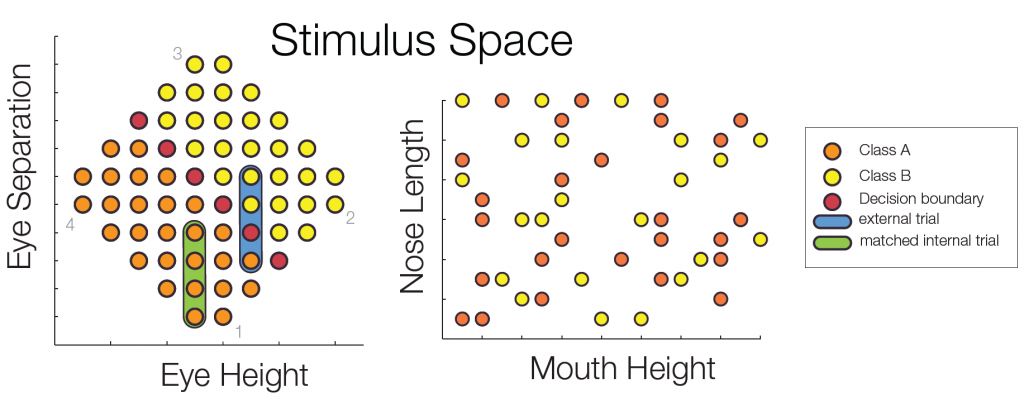

The decision boundary was rotated by 90° for every other subject. Importantly, the stimulus space was controlled for low-level confounds: only the combination of two parametric feature dimensions could be used for class discrimination, while two additional dimensions were irrelevant for the classification task.
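A category structure of this kind can be sketched as follows. The exact boundary used in the study is not given here, so the diagonal boundary through the origin, the 45° default angle, the class labels, and the function name `classify` are assumptions for illustration only.

```python
import math


def classify(stimulus, boundary_angle_deg=45.0):
    """Assign a 4-dimensional stimulus to class 'A' or 'B'.

    Hypothetical setup: dimensions 0 and 1 are relevant, dimensions 2 and 3
    are irrelevant. The boundary is a line through the origin of the
    relevant plane at the given angle.
    """
    x1, x2 = stimulus[0], stimulus[1]
    theta = math.radians(boundary_angle_deg)
    # Unit normal of the boundary line; its sign decides the class.
    normal = (-math.sin(theta), math.cos(theta))
    side = x1 * normal[0] + x2 * normal[1]
    return "A" if side > 0 else "B"


# The same value on dimension 0 occurs in both classes, so that dimension
# alone cannot be used to solve the task.
print(classify((0.8, 0.9, 0.5, -0.3)))  # class A
print(classify((0.8, 0.2, 0.5, -0.3)))  # class B

# Rotating the boundary by 90 degrees regroups the same stimuli.
print(classify((0.2, 0.8, 0.5, -0.3), boundary_angle_deg=135.0))
```

With a diagonal boundary, neither relevant dimension separates the classes on its own, which is the property the stimulus space was designed to have.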

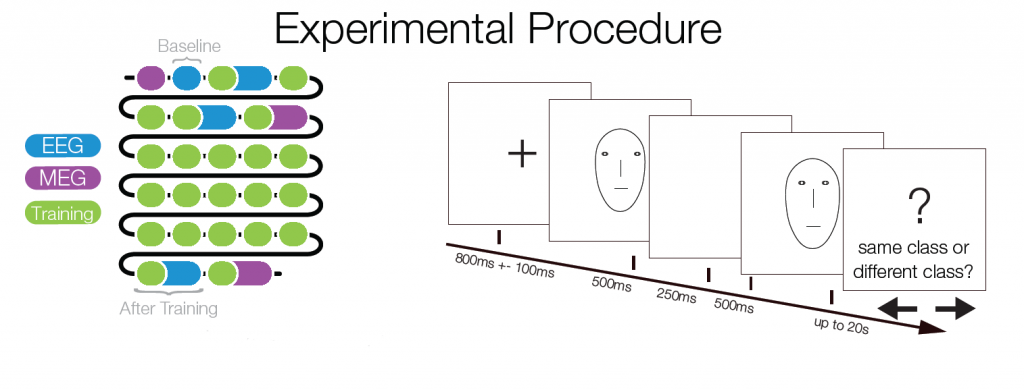

Subjects trained to distinguish the two classes during 22 sessions. At several points in time an EEG-adaptation procedure (similar to fMRI-adaptation) was used. With this procedure, it can be tested whether and where repeated presentations of a stimulus category (as opposed to showing two different categories) lead to a smaller ERP amplitude. Such a reduction can be used as a marker of category selectivity in the underlying neuronal population. Importantly, class-internal and class-external trials were matched for low-level similarity, which excludes potential low-level confounds.

EEG preprocessing: EEG data were visually cleaned. ICA was used to exclude eye components. ERP data were band-pass filtered between 1 Hz and 50 Hz. The baseline window was -1450 ms to -750 ms relative to second stimulus onset. ERPs were based on 3005 and 3012 trials for the internal and external conditions, respectively. Peak ROI: 80 ms to 160 ms.
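The baseline correction and peak extraction steps can be sketched in plain Python. This is a simplified illustration, not the pipeline used in the study: the function names, the synthetic epoch, and the use of the maximum as the peak measure (appropriate only for a positive component) are assumptions. Filtering and ICA are omitted.

```python
def baseline_correct(samples, times_ms, start_ms=-1450.0, end_ms=-750.0):
    """Subtract the mean of the baseline window from every sample."""
    baseline = [v for v, t in zip(samples, times_ms) if start_ms <= t <= end_ms]
    offset = sum(baseline) / len(baseline)
    return [v - offset for v in samples]


def peak_amplitude(samples, times_ms, start_ms=80.0, end_ms=160.0):
    """Return the largest value inside the peak window (positive peak assumed)."""
    window = [v for v, t in zip(samples, times_ms) if start_ms <= t <= end_ms]
    return max(window)


# Synthetic single-channel epoch: constant offset of 2.0 plus a bump at 100 ms.
times_ms = list(range(-1500, 500, 10))
epoch = [2.0 + (5.0 if t == 100 else 0.0) for t in times_ms]

corrected = baseline_correct(epoch, times_ms)
peak = peak_amplitude(corrected, times_ms)
print(peak)
```

Times are expressed relative to the onset of the second stimulus, so the default baseline window precedes it and the peak window follows it.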

Results

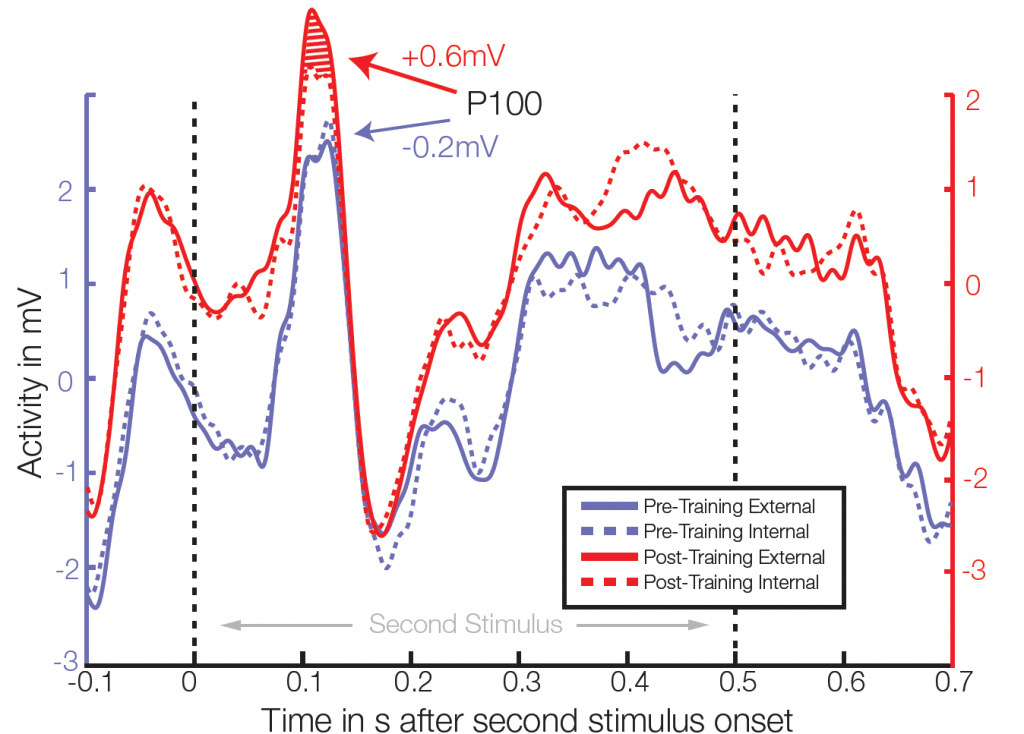

Average ERP of 4 electrodes in the right temporal/occipital area. After training, significant differences between the class-external and class-internal conditions were found in the P100 amplitude in 3 out of 64 electrodes (t-test, individual p < 0.006, FDR-corrected for multiple comparisons, q = 0.05).
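FDR correction across electrodes can be sketched as below. The specific FDR procedure is not stated here, so the Benjamini-Hochberg step-up procedure is assumed; the function name and the p-values are hypothetical and are not data from this study.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return one boolean per test: True if it survives FDR control at level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k with p_(k) <= q * k / m.
    cutoff_rank = 0
    for rank, index in enumerate(order, start=1):
        if p_values[index] <= q * rank / m:
            cutoff_rank = rank
    significant = [False] * m
    for index in order[:cutoff_rank]:
        significant[index] = True
    return significant


# Hypothetical per-electrode p-values, for illustration only.
example_p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205]
flags = benjamini_hochberg(example_p, q=0.05)
print(flags)
```

In this example only the two smallest p-values fall below their rank-dependent thresholds, so only those two tests are flagged as significant.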

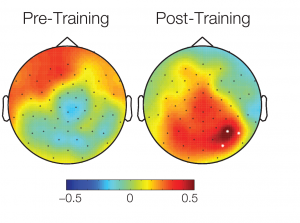

The topographical plot of the differences between conditions, averaged over all subjects 90 ms to 120 ms after stimulus onset, shows a cluster of significant electrodes in a temporal area. White dots: significant electrodes.

Discussion

Here we show that extensive learning of two visual categories, not confounded by low-level stimulus properties, leads to significant patterns of category selectivity in occipitotemporal cortex as early as 100 ms after stimulus onset.

These effects cannot be explained by low-level stimulus properties, because (I) stimulus space and experimental conditions were strictly controlled to contain the same low-level information (no single feature dimension is sufficient for categorization, and class-external and class-internal conditions were matched for low-level differences), and (II) the baseline measurement, prior to training, showed no significant effects. Moreover, our results cannot be explained by motor artifacts, as the finger-response mapping was alternated during the experimental session.

The timing of the observed effect calls into question the proposed necessity of feedback from lateral prefrontal cortex, at least for highly trained subjects. This view is in line with a recent monkey lesion study by Minamimoto et al. (2010).

Summing up, our results are best explained by a model in which perceptual category information is extracted in a feed-forward manner, and not by a frontal mechanism acting top-down.

References

Jiang, X., Bradley, E., Rini, R. A., Zeffiro, T., Vanmeter, J., & Riesenhuber, M. (2007). Categorization training results in shape- and category-selective human neural plasticity. Neuron, 53(6), 891-903.

Liu, J., Harris, A., & Kanwisher, N. (2002). Stages of processing in face perception: an MEG study. Nature Neuroscience, 5(9), 910-6.

Sigala, N., & Logothetis, N. K. (2002). Visual categorization shapes feature selectivity in the primate temporal cortex. Nature, 415(6869), 318-20.

Riesenhuber, M., & Poggio, T. (2000). Models of object recognition. Nature Neuroscience, 3 Suppl, 1199-204.

Minamimoto, T., Saunders, R. C., & Richmond, B. J. (2010). Monkeys quickly learn and generalize visual categories without lateral prefrontal cortex. Neuron, 66(4), 501-507.

Rossion, B., & Caharel, S. (2011). ERP evidence for the speed of face categorization in the human brain: Disentangling the contribution of low-level visual cues from face perception. Vision Research, 51(12), 1297-311.

Kietzmann, T. C., & König, P. (2010). Perceptual learning of parametric face categories leads to the integration of high-level class-based information but not to high-level pop-out. J Vis, 10(13), 20.